Detect lines in an image using JavaScript

A coworker came to me last week asking if by chance I knew how to algorithmically detect the corners of a piece of paper whose picture was taken by a smartphone. Actually, this problems is best solved by detecting the edges of the paper, corners being the intersection of such lines…

Disclaimer: while the implementation in its current state is not fully optimized nor taking advantage of parallel web workers, the results it provides are already close to final. At the end of this blog post, I will discuss proposals aiming at improving this solution, but I’ll let those as an exercice for the reader ;-)

With that said, let’s start with some pictures! Here is the original picture used in the tests:

Detecting the edges

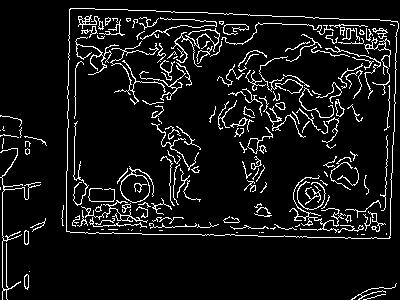

The first step is to use an edge detection algorithm to simplify this picture. The method I used here is the Canny Edge Detection implemented in FeatJS. Here’s what it gives me:

The idea of edge detection is to remember pixels that are very different to their neighbors, which can be done by computing the difference between an image and itself shifted by 1 pixel in any direction.

To avoid too many false positives, the image is blurred first, so that only strong edges will remain visible. Some lines may be too big after this change, so edges that are too tick are thinned to 1px by using a filter.

Detecting the lines

The second part of the problem is to find out what points in this image are aligned and form lines. A simple method to solve this problem is, for each white pixel, to enumerate all the lines this pixel can be part of, and increase their likelyhood.

Lines that only cross a few points will get their likely hood increased a bit, while lines that go through a lot of points will see their likelyhood increase a lot. We just need to find the maximum values in the generated likelyhoods to find out which line are the most likely.

In our case, since we can assume we are looking for a rectangle that is mostly centered along the axis of the picture, we can attempt to find the peaks that corresponds to the four biggest types of lines (let’s keep the 3 best candidates for each):

- Horizontal at the top of the picture

- Horizontal at the bottom of the picture

- Vertical at the left of the picture

- Vertical at the right of the picture

Doing so for our original picture works pretty well, but the “most likely result” (black lines) is not the shape we were expecting (the vertical left line we want is only 2nd in likeliness):

Removing the noise

The problem is that our solution is too friendly with noise, and any point one the path of a line ends up incrementing its likelihood, even if the point is part of another line visually (or part of no line at all).

The Hough Transform tends to find too much line the more noise there is, so a solution would be to remove the noise.

The solution I choose is to apply the Hough Transform on small slices of the original picture, and try to detect (horizontal or vertical) lines in these small slices.

Because the slices are small, we can be very strict in what it means for a line to be there. In this case, I added the fact that black points that have no white neighbor diminish the probability of the lines that go through them in addition to having white points increment likelihoods. That biais us towards lines that cross the slice almost entirely.

Once I found the very likely lines for the slice, I just erase any point of the slice that is not part in any of those lines. Here is the picture after this filter:

Applying the Hough Transform on the whole picture again yields the correct result this time:

Finding the most likely “rectangle”

It is still kinda stupid to rely on each line taken separately to define the resulting shape of the piece of paper. Indeed, the shape must be determined using a combination of four of these lines, one in each bucket. Hoping the first one of each will also be the right one is too hopeful.

A final step that I did not implement would be to try each of the 3*3*3*3=81 combinations for the edge of the paper sheet, intersect them 2-by-2, and draw the resulting 4-points polyline on a canvas, then only keep the pixels of the original image that are part of these lines by drawing the original canny-edge image on top of the temporary canvas containing the candidate polyline with a globalCompositionOperator of ‘multiply’, then count the amount of white pixels in the remaining image.

The solution among the 81 that has the most white pixels, as indicated by the count, is likely to be the one we are looking for. This favors bigger rectangles, which is what the expect to find.

In this case, this would have been sufficient to eliminate the erroneous vertical-left line because most of its pixels are not part of the rectangles that would have been formed.

That’s it. You can read the sample code here: https://github.com/FremyCompany/hough-transform/.

You can also view the running demo there: https://rawgit.com/FremyCompany/hough-transform/master/index.html.